CSL UROP Project: Hexapod Robot

A ROS2-based hexapod robot featuring LiDAR-based mapping, AprilTag tracking, and a manual control interface. The system combines autonomous navigation, visual marker following, and remote operation.

Project Background

This project was conducted as part of the Undergraduate Research Opportunity Program (UROP) at City Science Lab @ Taipei Tech, in collaboration with MIT Media Lab. While I had prior robotics experience, this marked my first venture into legged robotics and ROS2 integration. The project provided an excellent opportunity to develop a deeper understanding of Simultaneous Localization and Mapping (SLAM) and autonomous navigation systems.

ROS2 Implementation & Control Interface

The initial phase involved developing Python-based control systems for the hexapod’s movement, including linear and angular motion control along with stance management. I subsequently implemented a ROS2 node architecture to handle cmd_vel and joy topic subscriptions, enabling robot control through ROS2’s Data Distribution Service (DDS).
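To illustrate the joystick side of this control path, here is a minimal sketch of turning raw stick axes into a planar velocity command of the kind carried on cmd_vel. It is plain Python with no ROS2 dependency and is not the project's actual node: the axis layout, the deadzone, and the speed limits are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class VelocityCommand:
    """Planar velocity command with the Twist fields a walking robot would use."""
    linear_x: float   # forward speed, m/s
    linear_y: float   # sideways speed, m/s
    angular_z: float  # yaw rate, rad/s


def apply_deadzone(value: float, deadzone: float = 0.1) -> float:
    """Zero out small stick deflections and rescale the rest to [-1, 1]."""
    if abs(value) < deadzone:
        return 0.0
    sign = 1.0 if value > 0 else -1.0
    return sign * (abs(value) - deadzone) / (1.0 - deadzone)


def joy_to_velocity(axes, max_linear=0.2, max_angular=0.8):
    """Map joystick axes to a velocity command.

    Assumed (hypothetical) layout: axes[0] = strafe, axes[1] = forward,
    axes[2] = yaw, each in [-1, 1]. The limits are illustrative values.
    """
    strafe, forward, yaw = (apply_deadzone(a) for a in axes[:3])
    return VelocityCommand(
        linear_x=forward * max_linear,
        linear_y=strafe * max_linear,
        angular_z=yaw * max_angular,
    )


# Full forward stick with a tiny yaw deflection that the deadzone suppresses.
command = joy_to_velocity([0.0, 1.0, 0.05])
print(command)
```

In a real node, a function like this would sit inside the joy subscription callback, and the result would be handed to the gait controller or republished as a Twist message.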

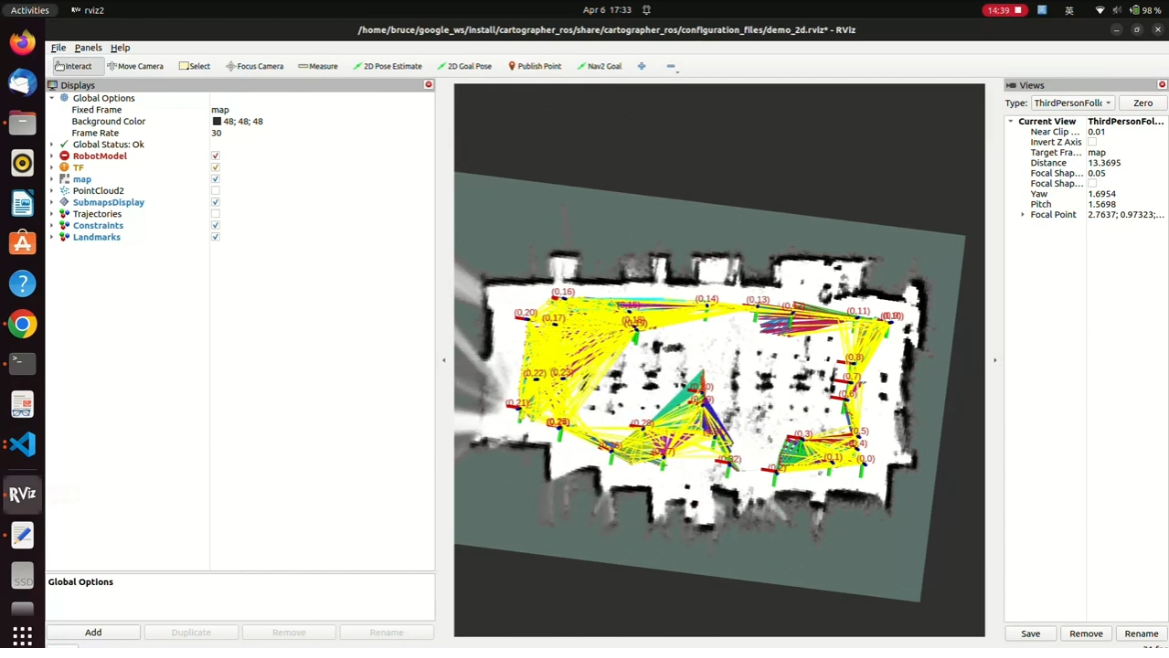

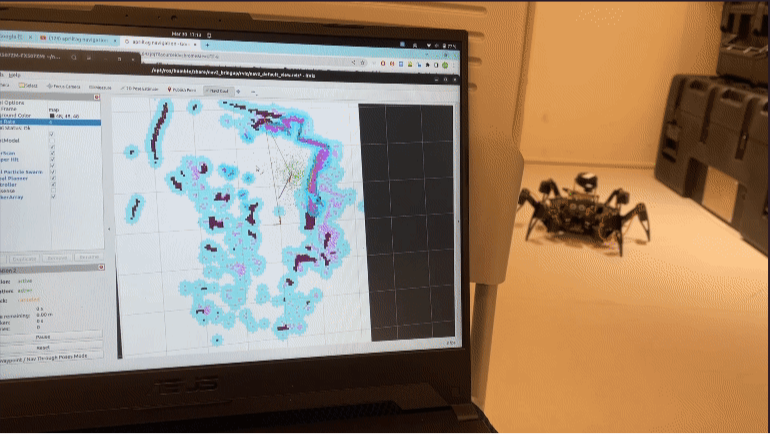

SLAM Integration

The SLAM implementation presented unique challenges, as many conventional algorithms rely on sensor fusion for optimal odometry estimation. After testing several solutions, including LIO-SAM, GMapping, and Cartographer, I selected Cartographer because it performed robustly without requiring an external odometry source.

Navigation System

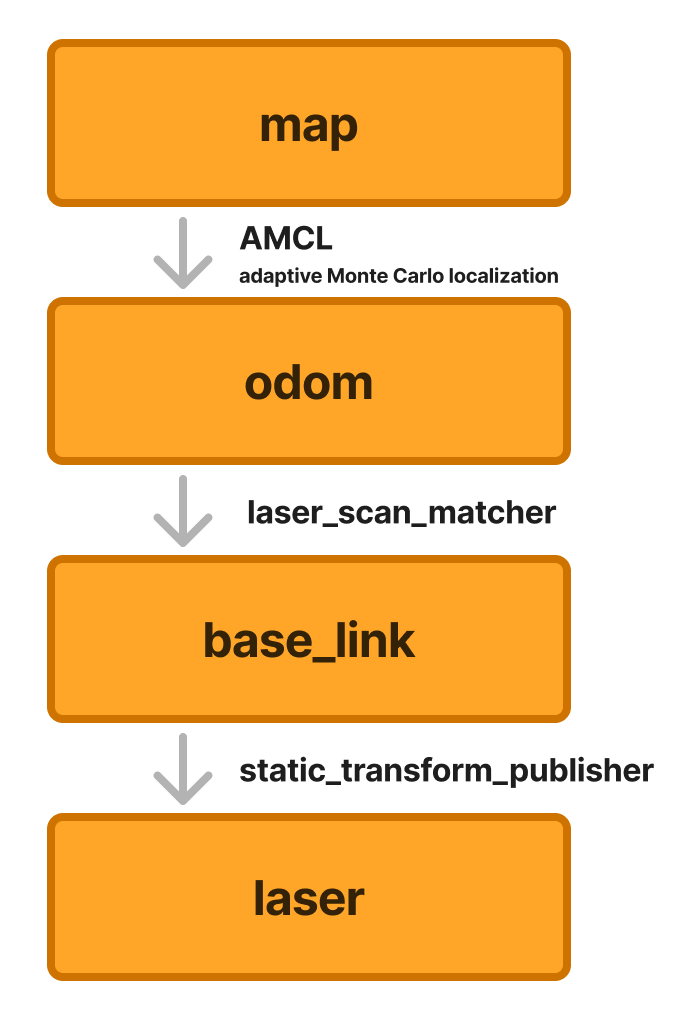

The navigation implementation required establishing a comprehensive TF tree for ROS2’s Navigation2 framework. Key components include:

- AMCL: Adaptive Monte Carlo Localization for map-to-odometry transformations.

- Laser Scan Matcher: LiDAR-based odometry calculation.

- Static Transform Publisher: Defining spatial relationships between frames.
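The way these three components chain together in the TF tree can be sketched with plain 2D transforms. The example below is an illustration only, not code from the project: the frame names (map, odom, base_link, laser) follow common ROS conventions and are assumed here, and all numeric poses are made up. It shows how a localizer's map-to-odom correction can be derived from a map-frame pose estimate and the scan-matcher odometry, and how the static sensor offset completes the chain.

```python
import math
from dataclasses import dataclass


def wrap_angle(angle: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


@dataclass
class Pose2D:
    """Planar transform: translation (x, y) in metres, rotation theta in radians."""
    x: float
    y: float
    theta: float

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Return self * other, i.e. `other` expressed in the parent frame of `self`."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(
            self.x + c * other.x - s * other.y,
            self.y + s * other.x + c * other.y,
            wrap_angle(self.theta + other.theta),
        )

    def inverse(self) -> "Pose2D":
        """Return the transform that undoes this one."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(
            -(c * self.x + s * self.y),
            s * self.x - c * self.y,
            wrap_angle(-self.theta),
        )


# odom -> base_link: LiDAR odometry (illustrative values; drifts over time).
odom_to_base = Pose2D(1.0, 0.0, 0.0)
# map -> base_link: the localizer's pose estimate on the map (illustrative).
map_to_base = Pose2D(1.2, 0.1, 0.0)
# map -> odom: the correction that absorbs the odometry drift.
map_to_odom = map_to_base.compose(odom_to_base.inverse())
# base_link -> laser: fixed mounting offset (hypothetical value).
base_to_laser = Pose2D(0.05, 0.0, 0.0)

# Walking the full chain gives the sensor pose in the map frame.
map_to_laser = map_to_odom.compose(odom_to_base).compose(base_to_laser)
print(map_to_odom, map_to_laser)
```

Keeping the drift correction in map-to-odom means the odom-to-base_link transform stays smooth, while jumps from relocalization only affect the map frame.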

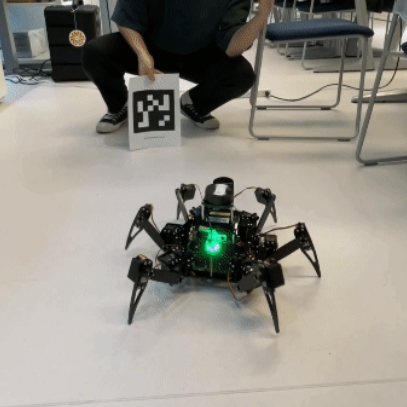

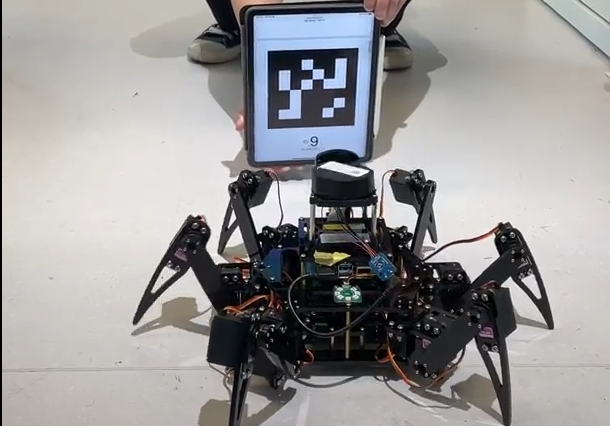

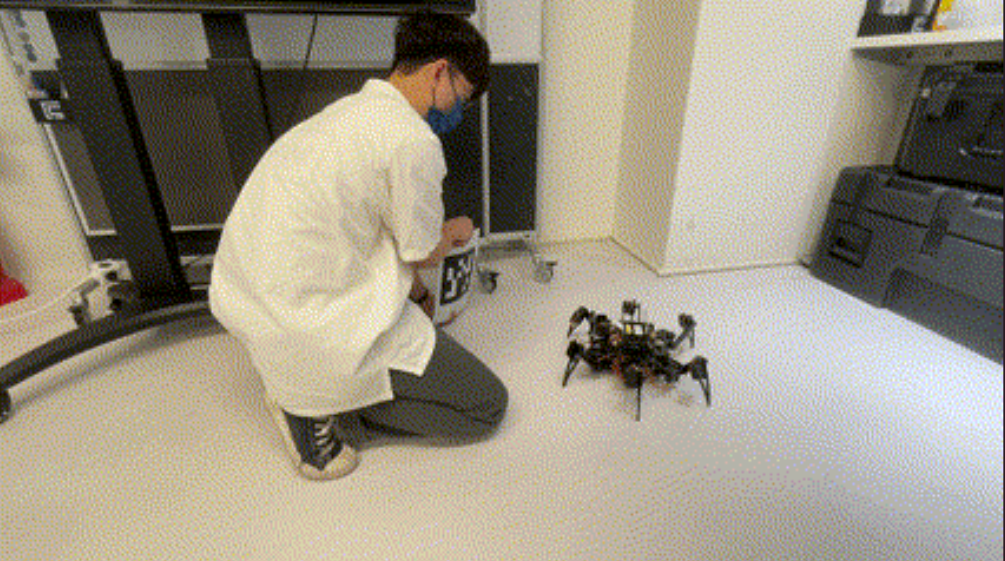

AprilTag Integration

To enhance the robot’s capabilities, I implemented AprilTag-based localization and tracking. The system maintains a specified relative position to detected AprilTags, enabling dynamic target following behavior.
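One simple way to hold a relative position to a tag is a proportional controller on range and bearing. The sketch below assumes the tag position has already been transformed into the robot frame (x forward, y left); the gains, limits, target distance, and tolerance are illustrative values, and this is not necessarily the control law used on the robot.

```python
import math


def clamp(value: float, limit: float) -> float:
    """Limit value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def follow_tag(tag_x, tag_y, target_distance=0.5,
               k_linear=0.8, k_angular=1.5,
               max_linear=0.2, max_angular=0.8, tolerance=0.05):
    """Return (linear_x, angular_z) that steers toward the tag and holds range.

    tag_x, tag_y: tag position in the robot frame, in metres.
    All gains and limits are hypothetical tuning values.
    """
    distance = math.hypot(tag_x, tag_y)
    bearing = math.atan2(tag_y, tag_x)

    # Ignore small range errors so the robot does not shuffle in place.
    distance_error = distance - target_distance
    if abs(distance_error) < tolerance:
        distance_error = 0.0

    linear_x = clamp(k_linear * distance_error, max_linear)
    angular_z = clamp(k_angular * bearing, max_angular)
    return linear_x, angular_z


# Tag far ahead and to the left: walk forward at the limit while turning left.
print(follow_tag(1.0, 1.0))
# Tag closer than the target distance: back away.
print(follow_tag(0.3, 0.0))
```

The returned pair maps directly onto the linear.x and angular.z fields of a velocity command, so the follower can publish on its own cmd_vel source.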

System Integration

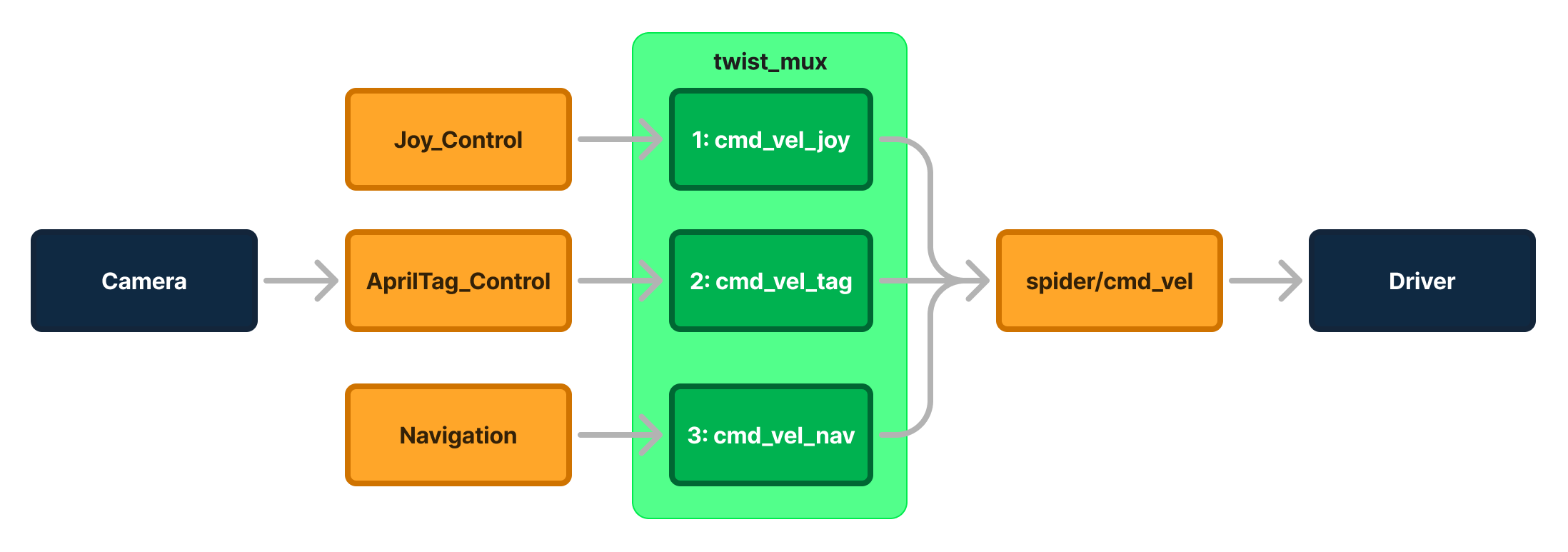

The final implementation incorporated all developed functionalities through a priority-based control system using the twist_mux node to manage multiple cmd_vel sources:

- Priority 1: Joystick control (highest priority)

- Priority 2: AprilTag following behavior

- Priority 3: Autonomous navigation
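The arbitration idea behind this ordering can be sketched in a few lines: each source has a rank and a timeout, and the best-ranked source that has published recently wins. This is an illustration of the concept rather than twist_mux code; the class names, timeouts, and commands are hypothetical. Note that twist_mux's own configuration assigns larger numbers to higher-priority topics, so the ranks below are an ordering, not config values.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Command = Tuple[float, float]  # (linear_x, angular_z)


@dataclass
class Source:
    rank: int                          # 1 = highest priority, as in the list above
    timeout: float                     # seconds before a silent source is ignored
    last_stamp: Optional[float] = None
    last_cmd: Command = (0.0, 0.0)


class PriorityMux:
    """Pick the command from the best-ranked source that is still fresh."""

    def __init__(self, sources: Dict[str, Source]):
        self.sources = sources

    def update(self, name: str, cmd: Command, stamp: float) -> None:
        """Record the latest command and its timestamp for one source."""
        src = self.sources[name]
        src.last_cmd = cmd
        src.last_stamp = stamp

    def select(self, now: float) -> Tuple[Optional[str], Command]:
        """Return (source name, command); stop the robot if nothing is fresh."""
        active = [
            (src.rank, name)
            for name, src in self.sources.items()
            if src.last_stamp is not None and now - src.last_stamp <= src.timeout
        ]
        if not active:
            return None, (0.0, 0.0)
        _, name = min(active)
        return name, self.sources[name].last_cmd


mux = PriorityMux({
    "joystick": Source(rank=1, timeout=0.5),
    "apriltag": Source(rank=2, timeout=0.5),
    "navigation": Source(rank=3, timeout=0.5),
})
mux.update("navigation", (0.1, 0.0), stamp=0.0)
mux.update("joystick", (0.0, 0.5), stamp=0.1)
print(mux.select(now=0.2))  # the joystick overrides navigation while it is fresh
```

The timeout is what lets control fall back automatically: once the operator releases the joystick and its messages stop, the next fresh source takes over without any explicit handover.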

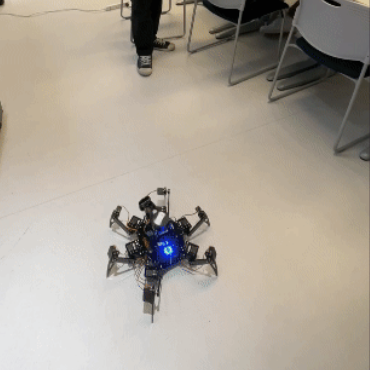

The robot indicates its operational state through LED feedback: green during standard operation, blue during active navigation, and green again once navigation completes.